Indus Appstore Private Limited

(Formerly known as ‘OSLabs Technology (India) Private Limited’)

CIN - U74120KA2015PTC174871

Registered Address:

Office-2, Floor 4, Wing B, Block A, Salarpuria Softzone, Bellandur Village, Varthur Hobli, Outer Ring Road, Bangalore South, Bangalore, Karnataka, India, 560103

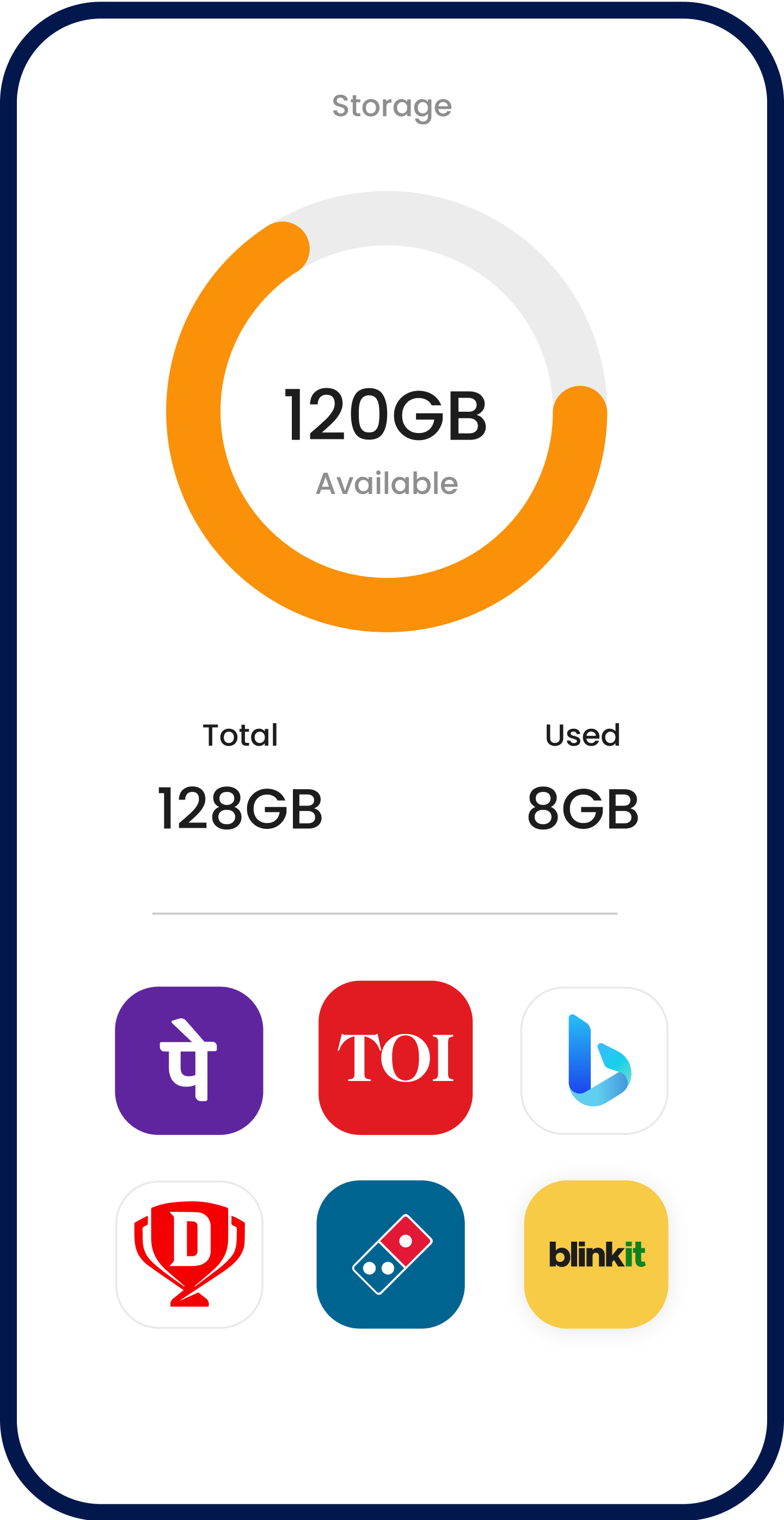

Disclaimer: All trademarks are property of their respective owners.

For any questions or feedback, reach out to us at [email protected]